Module 1: Introduction to Prompt Engineering

What is Prompt Engineering?

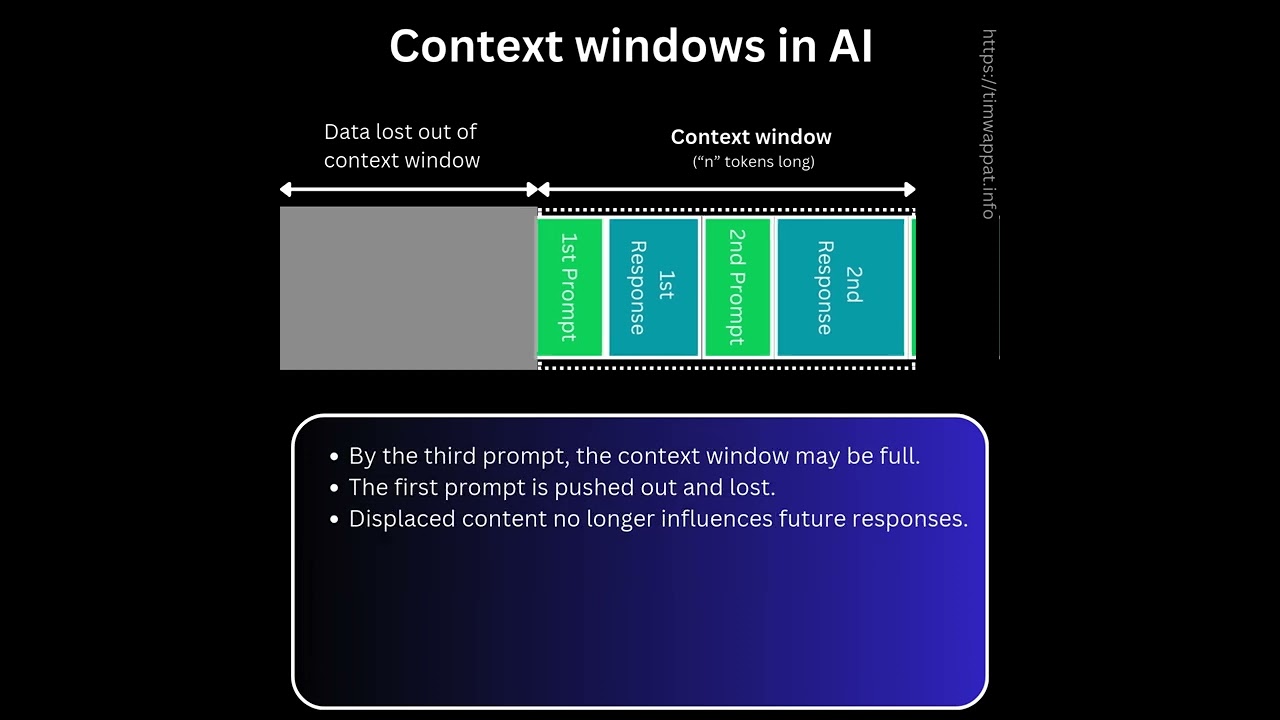

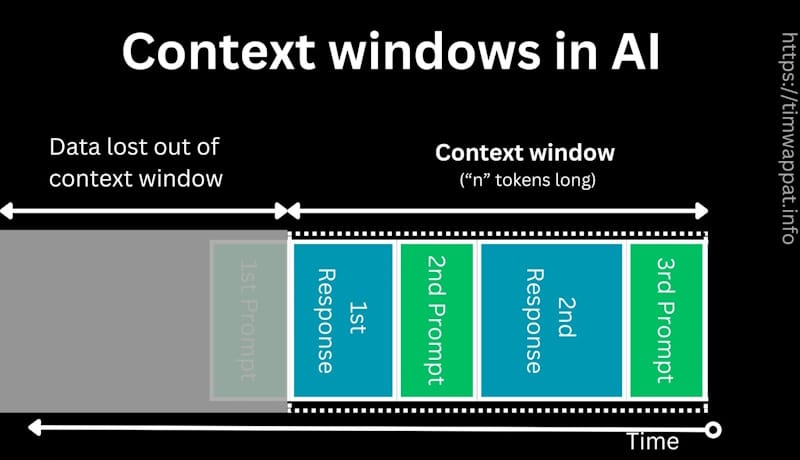

Definition: Crafting effective instructions to get optimal results from AI language models

Why it matters: It is the difference between getting a generic response and getting exactly what you need

Core Principles

Vibe coding is still prompting, whatever you're working on

LLMs are "pretty stupid" and need clear, well-structured instructions
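To make "clear, well-structured instructions" concrete, here is a minimal sketch that contrasts a vague prompt with one assembled from labelled sections. The helper `build_prompt`, its section names (role, task, constraints, output format), and the example text are all hypothetical, chosen for illustration; they are not a standard or a required template, and no model is called.

```python
def build_prompt(role, task, constraints, output_format):
    """Assemble a prompt from labelled sections (hypothetical helper)."""
    lines = [
        f"Role: {role}",
        f"Task: {task}",
        "Constraints:",
    ]
    # One bullet per constraint so each rule stands on its own line.
    lines.extend(f"- {c}" for c in constraints)
    lines.append(f"Output format: {output_format}")
    return "\n".join(lines)


# A vague prompt leaves the model to guess audience, length, and format.
vague_prompt = "Write about our product."

# The structured version states each of those things explicitly.
# All values below are made-up illustration data.
structured_prompt = build_prompt(
    role="You are a copywriter for a B2B software company.",
    task="Write a product description for a time-tracking app.",
    constraints=[
        "Keep it under 80 words.",
        "Address team leads, not individual users.",
    ],
    output_format="One paragraph of plain text, no headings.",
)

print(structured_prompt)
```

Keeping each section on its own labelled line is one possible design choice: it makes a prompt easy to review and lets you change a single constraint without rewriting the rest.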

Evolution of Prompting

Prompting practice has changed from early LLMs to current models

Good prompting reduces cost and complexity in AI systems